Let’s self-host!

Get started with self-hosting, using Docker, HAProxy and Let’s Encrypt

Self-hosting is a fun and cost-efficient way to run web services, be it for some silly side-projects, or for miscellaneous third-party services that you want to host for personal usage.

Unless you are motivated to operate a physical machine out of your own basement, the easiest option is to rent a (virtual) server at some hosting provider of your choice – say, Hetzner, DigitalOcean, or Your-Friendly-Data-Center-From-Around-The-Corner™. For a few bucks a month, you get root access, decent computing power, and plenty of disk space to mess around with.

In contrast to platform-as-a-service providers that offer fully integrated hosting solutions with an all-inclusive experience (like fly.io, GCloud Run, or Heroku), our own server gives us lots of flexibility at a stable price point. So for the hobby-style projects that we are talking about here, self-hosting is an attractive option.

Probably the biggest barriers to entry are taking care of the general server and networking setup, figuring out a smooth deployment procedure, and dealing with TLS certificates. In this blog post, I want to describe a self-hosting setup that I find quite handy, and that satisfies the following criteria:

- There should be a single machine to deploy multiple web services to. These web services can be connected to each other, but they can also be independent.

- Adding new services should require minimal configuration overhead.

- The entire server setup should be easy to reproduce, so most of the infrastructure should be defined in code.

- Deployment should be as simple as running a single command.

- Each service should have a dedicated (sub-)domain assigned to it.

- All traffic should be served via HTTPS.

- It should be possible to re-create the setup locally for testing and debugging purposes.

Non-goals include automated failover mechanisms, horizontal scaling, or zero-downtime deployments.

Overview

These are the basic ingredients of our self-hosting tech stack:

- Docker for running services inside well-defined, reproducible, and self-contained environments.

- Let’s Encrypt (via the acme.sh tool) for obtaining free TLS certificates for HTTPS connections.

- HAProxy as HTTP gateway for terminating TLS, and for dispatching (sub-)domains to specific Docker containers.

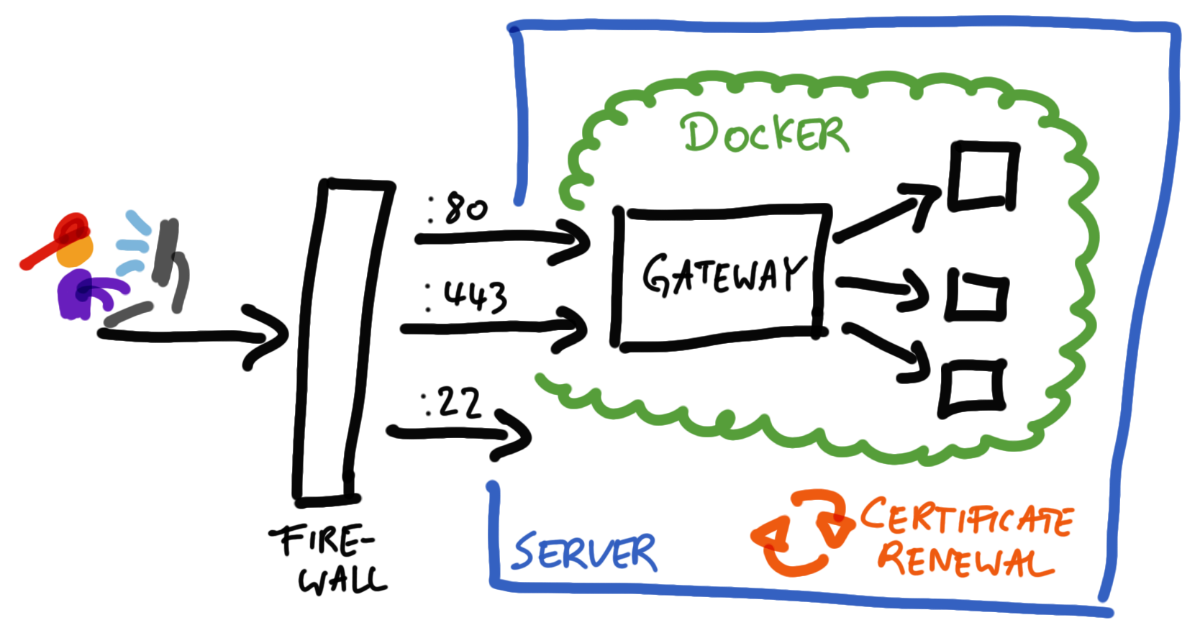

That being said, the control flow for incoming requests looks like this: after incoming requests have passed the (external) firewall of our hosting provider, they are dispatched to the HTTP gateway, which runs inside a Docker container. The gateway examines the (sub-)domain of the request, and then forwards the request to its designated Docker container. These target Docker containers run the actual services, which eventually process and respond to the requests.

Apart from that, there is a cron-job on the machine, which takes care of refreshing the TLS certificates periodically. And, last but not least, the external firewall of course allows incoming SSH connections, for us to manage the server via the terminal.

In the following sections, I break down the individual components in more detail, and describe the initial setup procedure step by step. Note: this guide assumes the following things:

- We own a domain; in this case, say: example.org

- We control the DNS entries for our domain.

- We know how to configure our local machine for accessing example.org via SSH.

To illustrate how we can deploy individual services to our server, we are going to deploy a very simple NodeJS-based “Hello World” demo web app, that we want to publish under the hello.example.org subdomain. This “Hello World” app is just for demonstration purposes – we could add a bunch of other services in the same fashion.

Project structure

For making our self-hosting setup happen, we create a project folder on our local machine. This folder contains all relevant files that we need for operating the server, and we can also put it under version control. The project structure looks like this:

- deploy [1] – a local helper script that we use to deploy the application from our development machine. (Note: this file has to be executable.)

- src/

  - gateway/

    - haproxy.cfg – the configuration file of our HAProxy gateway.

    - issue-certificates – a script for generating certificates via Let’s Encrypt. (Note: this file has to be executable.)

  - hello/

    - main.js – the source file of our NodeJS “Hello World” demo web app. (We could also place any other files related to the “hello” service here, as we add more features to it.)

  - docker-compose.yml – the file that defines all Docker services.

The src/ folder contains the files that are needed on the server. During the deployment, we will mirror the entire src/ folder to the server. We can reproduce the complete server state from the src/ folder. The other files in the project root are for local development, documentation, or anything else.

On the server, we log in as the root user [2], and we use the /root folder as our remote working directory.

Deployment

Our deployment mechanism syncs up the contents of the local src/ folder to the remote /root/src location, and then restarts all Docker services.

We use the deploy helper script to trigger the deployment. For that to work, we need to adjust the SSH_HOST variable of that script, and make it match our domain:

readonly SSH_HOST="example.org"

In the same fashion as the deploy script, we can create other, arbitrarily sophisticated helper procedures, which allow us to conveniently inspect or manage the server state from our local dev machine.
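The deploy script itself is not reproduced in this post, so here is a minimal sketch of what such a helper could look like. It assumes that rsync is available locally and that the Docker Compose plugin (`docker compose`) is installed on the server; the `run` wrapper and the `DRY_RUN` switch are hypothetical additions for trying the script out safely, and the `--bootstrap` flag is left out for brevity.

```shell
#!/bin/sh
# Hypothetical sketch of the `deploy` helper; the real script may differ.
set -eu

SSH_HOST="${SSH_HOST:-example.org}"  # assumption: reachable via our local SSH config
REMOTE_DIR="/root/src"

# Execute a command, or merely print it when DRY_RUN=1 is set.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

deploy() {
  # Mirror the local src/ folder to the server, removing stale remote files.
  run rsync --archive --delete src/ "${SSH_HOST}:${REMOTE_DIR}/"

  # Optionally re-issue the certificates on the server.
  if [ "${1:-}" = "--issue-certificates" ]; then
    run ssh "${SSH_HOST}" "${REMOTE_DIR}/gateway/issue-certificates"
  fi

  # Recreate and restart all Docker services.
  run ssh "${SSH_HOST}" "cd ${REMOTE_DIR} && docker compose up --detach --force-recreate"
}
```

Adding a final `deploy "$@"` line would turn this into a standalone script. With `DRY_RUN=1` set, the commands are only printed, which is handy for checking what a deployment would do.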

Docker

Docker allows us to run each service as isolated process inside a well-defined environment. The services can optionally be configured to talk to each other via the Docker network. All our Docker containers are defined in the src/docker-compose.yml file.

    version: "3.8"

    services:
      gateway:
        image: "haproxy:2.9.0-alpine"
        restart: "always"
        depends_on:
          - "hello"
        volumes:
          - "./gateway/haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg:ro"
          - "/root/certs.pem:/etc/ssl/certs/ssl.pem:ro"
        env_file:
          - "/root/gateway.env"
        ports:
          - "443:443"
          - "80:80"

      hello:
        image: "node:20.11.0-alpine3.18"
        restart: "always"
        hostname: "hello"
        environment:
          - "PORT=8080"
        volumes:
          - "./hello:/hello:ro"
        command: "/hello/main.js"
        ports:
          - "8080:8080"

In our example, there are two services defined, which Docker runs as individual containers. The gateway container exposes ports 80 and 443, which our external firewall makes available publicly.

- The gateway service has to depend on all services that are specified in our HAProxy configuration, to ensure that Docker starts everything automatically and in the right order. HAProxy may fail to start otherwise.

- The restart policies (on each service!) ensure that the Docker daemon automatically tries to recover after failures. This also conveniently causes it to start all containers after system boot, by the way.

- The /root/certs.pem and /root/gateway.env files will be provided later, during the initial server bootstrapping procedure.

HTTP Gateway

HAProxy is a powerful reverse proxy, which we use as HTTP gateway. It listens for incoming requests (on so-called “frontends”), and then dispatches them to the right services (“backends”) based on our custom routing rules. Apart from the dispatching, one important job for HAProxy is to terminate TLS, i.e., to take care of proper HTTPS transport encryption. HAProxy is configured by means of the src/gateway/haproxy.cfg file.

    defaults
      timeout client 30s
      timeout server 30s
      timeout connect 5s

    frontend http
      mode http
      bind *:80
      acl acl_acme path_beg /.well-known/acme-challenge
      http-request redirect scheme https if !{ ssl_fc } !acl_acme
      use_backend letsencrypt if acl_acme

    frontend https
      mode http
      bind *:443 ssl crt /etc/ssl/certs/ssl.pem
      use_backend hello if { hdr(host) -i hello.example.org }

    backend letsencrypt
      mode http
      http-request return status 200 content-type "text/plain" lf-string "%[path,regsub(/.well-known/acme-challenge/,,g)].%[env(ACME_THUMBPRINT)]" if TRUE

    backend hello
      mode http
      server hello hello:8080

This is what’s going on:

- The http frontend redirects all incoming HTTP requests to HTTPS, except the ACME challenge requests from Let’s Encrypt, which are handled by the letsencrypt backend.

- The letsencrypt backend assembles the response for ACME challenge requests. These requests come from Let’s Encrypt, and are part of the standardised process for Let’s Encrypt to issue new certificates for us. The ACME response has to be constructed in a specific format, and it has to contain our ACME thumbprint. (More on that later.)

- The https frontend dispatches incoming requests to their respective backend, based on their “host” header.

- The hello backend forwards all incoming requests to the local hello hostname on port 8080, which is where our hello Docker service happens to listen. Therefore, the hostname and port combination must match the values of the hello Docker service from the docker-compose.yml file.
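To make the “specific format” of the ACME response more tangible: for this kind of HTTP challenge, the expected body is the token from the request path, followed by a dot and the account thumbprint. The following shell function is only an illustration of what the letsencrypt backend computes; the function name and the sample values are made up. (HAProxy’s regsub removes the path prefix, whereas the shell strips it as a leading prefix, which yields the same result for well-formed challenge paths.)

```shell
#!/bin/sh
# Mimics the body that the `letsencrypt` backend returns for a challenge request.
# Arguments: $1 = request path, $2 = ACME account thumbprint.
acme_response() {
  token="${1#/.well-known/acme-challenge/}"  # strip the path prefix, keep the token
  printf '%s.%s' "$token" "$2"
}

# Example with made-up values:
acme_response "/.well-known/acme-challenge/some-token" "12345"
# prints: some-token.12345
```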

For extending the gateway with a new service, we add another use_backend directive to the https frontend block, and a new service-specific backend that is linked to the designated Docker container.

TLS certificates

We use Let’s Encrypt to provide TLS certificates for all (sub-)domains that we want to use. The process for obtaining the certificates is encoded in the src/gateway/issue-certificates script, which is supposed to be executed on the server.

The script contains a DOMAINS bash array, where we need to maintain a list of all (sub-)domains that we want to request certificates for.

    readonly DOMAINS=(
      "hello.example.org"
    )

The script relies on the acme.sh tool internally to communicate with the Let’s Encrypt servers. The acme.sh tool maintains a bunch of internal state at /root/.acme.sh, which we don’t have to bother about, though.

What’s important for us is that the issue-certificates script writes the resulting certificate to /root/certs.pem, where HAProxy can pick it up. Whenever we add a new domain to our list [3], we have to re-issue the certificates. The deploy script can do this automatically at deploy time, if we call it with the --issue-certificates flag:

./deploy --issue-certificates
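Since the issue-certificates script is not shown here, the following is a rough sketch of how it might work, not the original. It assumes acme.sh’s stateless mode (which matches the thumbprint-based gateway configuration), uses a space-separated string instead of the bash array to stay POSIX-compatible, and introduces hypothetical intermediate files (key.pem, fullchain.pem) next to certs.pem. Depending on the acme.sh version and key type, additional flags such as `--ecc` may be needed for the export step.

```shell
#!/bin/sh
# Hypothetical sketch of `issue-certificates`; the original script is not shown in this post.
set -eu

# Space-separated stand-in for the DOMAINS bash array.
DOMAINS="hello.example.org"
WORK_DIR="/root"  # assumption: intermediate files live next to certs.pem

# Expand the domain list into repeated `-d <domain>` arguments for acme.sh.
domain_args() {
  for domain in $DOMAINS; do
    printf '%s %s ' "-d" "$domain"
  done
}

issue_certificates() {
  first_domain="${DOMAINS%% *}"

  # Request one certificate covering all domains. Stateless mode relies on
  # the gateway answering the challenge requests.
  # Word splitting of $(domain_args) is intended here.
  acme.sh --issue --stateless --server letsencrypt $(domain_args)

  # Export the key and the full chain, then concatenate them into the single
  # PEM file that HAProxy reads.
  acme.sh --install-cert -d "$first_domain" \
    --key-file "${WORK_DIR}/key.pem" \
    --fullchain-file "${WORK_DIR}/fullchain.pem"
  cat "${WORK_DIR}/fullchain.pem" "${WORK_DIR}/key.pem" > "${WORK_DIR}/certs.pem"

  # Restart the gateway so that HAProxy picks up the new certificate.
  (cd /root/src && docker compose restart gateway)
}
```

Calling `issue_certificates` at the end of the file would make this a standalone script that can be invoked both by the deploy helper and by cron.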

Bootstrapping the server

In order to get started on a fresh server, we need to carry out an initial bootstrapping procedure.

As preparation, we have to install a Linux distribution of our choice, and then get the basic networking configuration in order.

- Configure the external firewall to allow inbound traffic on these 3 ports:

- 22 (SSH)

- 80 (HTTP)

- 443 (HTTPS)

- Set up DNS records to make our domain(s) point to our server. In our case, we’d create an A/AAAA-type record for hello.example.org, and make it resolve to the IP address of our server.

- Provision our public SSH key on the server, so that we can log in via the terminal.

- Adjust the local SSH config so that we can conveniently do ssh example.org without further ado.

After that’s done, we can log in via SSH to carry out the bootstrapping steps on the terminal.

1. Install dependencies

We SSH into the server to install the following two tools via the CLI: [4]

- Docker

  - Docker’s install procedure depends on the Linux distribution that you use on your server.

- acme.sh

  - Since acme.sh is basically just a single shell script, you can download it as is from the repository onto your server, make it executable, and copy it somewhere inside your $PATH.

Afterwards, the docker and acme.sh commands should be globally available on the server.
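As a small aid for this step, here is a sketch with two hypothetical helper functions: one that downloads acme.sh into a directory on the PATH, and one that verifies that the required commands are available. The download URL assumes the master branch of the acmesh-official/acme.sh repository on GitHub, and /usr/local/bin is just one possible target location.

```shell
#!/bin/sh
# Hypothetical helpers for the dependency step; run them as root on the server.

# Download acme.sh into a directory on the PATH and make it executable.
# Assumption: the script is fetched from the master branch of the
# acmesh-official/acme.sh repository on GitHub.
install_acme_sh() {
  curl --fail --silent --show-error --location \
    --output /usr/local/bin/acme.sh \
    "https://raw.githubusercontent.com/acmesh-official/acme.sh/master/acme.sh"
  chmod +x /usr/local/bin/acme.sh
}

# Report whether each given command is available.
# Returns non-zero if at least one of them is missing.
require_commands() {
  missing=0
  for cmd in "$@"; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "ok: $cmd"
    else
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

After installing both tools, `require_commands docker acme.sh` should print two “ok” lines.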

Of course, we can install any other additional tools that may make our lives easier, such as our preferred CLI editor, or other debugging or system utilities.

2. Set up Let’s Encrypt

On the server, we use the acme.sh tool to register an account with Let’s Encrypt.

acme.sh --register-account --server letsencrypt

This command outputs a thumbprint value, which we have to store as environment variable in the /root/gateway.env file, so that the HAProxy gateway can read it.

echo 'ACME_THUMBPRINT=12345' > /root/gateway.env

(We must replace 12345 with the real thumbprint value.)
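Instead of copying the thumbprint by hand, we could also extract it from the command output. The sketch below assumes that acme.sh prints a line of the form `ACCOUNT_THUMBPRINT='...'` when registering; both function names are made up for illustration.

```shell
#!/bin/sh
# Extract the thumbprint from acme.sh's registration output (read from stdin).
# Assumption: the output contains a line like  ACCOUNT_THUMBPRINT='...'
extract_thumbprint() {
  sed -n "s/.*ACCOUNT_THUMBPRINT='\([^']*\)'.*/\1/p" | head -n 1
}

# Register the account and write the env file for the gateway container.
write_gateway_env() {
  thumbprint="$(acme.sh --register-account --server letsencrypt | extract_thumbprint)"
  if [ -z "$thumbprint" ]; then
    echo "no thumbprint found in acme.sh output" >&2
    return 1
  fi
  printf 'ACME_THUMBPRINT=%s\n' "$thumbprint" > /root/gateway.env
}
```

If the output format differs on your acme.sh version, `write_gateway_env` fails without touching the file, and the manual approach from above still works.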

3. Initial deployment

From our local machine, we perform a deployment in order to transfer all source files to the server. The deploy script contains routines for both the initial bootstrapping procedure, and for the certificate generation, so we can take care of that in a single step.

./deploy --bootstrap --issue-certificates

After running this command on our local machine, our server should be up and running.

For all subsequent deploys, we use ./deploy, or, in case we have updated the DOMAINS array, ./deploy --issue-certificates.

We only use the --bootstrap flag once per machine. After the bootstrapping is completed, we don’t need this flag anymore. It takes care of the following things:

- For starting the HTTP gateway, we have a bit of a chicken-and-egg situation [5]: HAProxy refuses to start without a valid TLS certificate, but we can’t obtain a certificate from Let’s Encrypt without a web server that would respond to the ACME challenge requests. Our solution is to generate a self-signed dummy certificate initially, with the sole purpose of satisfying HAProxy. That dummy certificate will only live for a few seconds, until it’s replaced by the real one.

- Let’s Encrypt certificates are intentionally short-lived (3 months), in order to encourage people to set up an automated renewal mechanism. Therefore, we enable a periodic cron-job, which runs the issue-certificates script automatically once a month.
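The two bootstrapping tasks above can be sketched as follows. This is not the actual --bootstrap implementation, just one possible way to do it, assuming that openssl and cron are installed on the server. The function names, the certificate subject, and the exact schedule (03:00 on the first day of each month) are arbitrary choices.

```shell
#!/bin/sh
# Hypothetical sketch of what `--bootstrap` might do on the server.

# Create a short-lived self-signed certificate and write certificate plus
# private key into one PEM file, which is the layout HAProxy expects.
# Argument: $1 = target file, e.g. /root/certs.pem
make_dummy_cert() {
  tmp_dir="$(mktemp -d)"
  openssl req -x509 -newkey rsa:2048 -nodes -days 1 \
    -subj "/CN=localhost" \
    -keyout "${tmp_dir}/key.pem" -out "${tmp_dir}/cert.pem" 2>/dev/null
  cat "${tmp_dir}/cert.pem" "${tmp_dir}/key.pem" > "$1"
  rm -rf "$tmp_dir"
}

# Print a crontab line that runs the script at 03:00 on the first day of each month.
cron_line() {
  echo "0 3 1 * * /root/src/gateway/issue-certificates"
}

# Append the line to the current user's crontab, keeping existing entries.
install_cron() {
  { crontab -l 2>/dev/null || true; cron_line; } | crontab -
}
```

Note that `install_cron` is not idempotent: running it twice would add the line twice, which is acceptable here because bootstrapping happens only once per machine.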

Adding new services

Whenever we want to add a new service, we need to take care of the following steps:

- Create a DNS record at our DNS provider.

- Add the domain to the DOMAINS array in issue-certificates.

- Define a Docker service in docker-compose.yml (and, if need be, add all necessary service files to the project).

- Add a new backend block to the haproxy.cfg gateway configuration, and reference it from the https frontend.

- Deploy by executing the ./deploy --issue-certificates command on your local dev machine.
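Because the domain has to be kept in sync across several files, a small pre-deploy check can catch a forgotten step. The following function is a hypothetical helper, not part of the original setup; it only verifies that a domain is mentioned in the two gateway files, and it does not validate the Docker service or the DNS record.

```shell
#!/bin/sh
# Hypothetical pre-deploy check: is a domain mentioned in every gateway file
# that has to know about it?
# Arguments: $1 = domain, $2 = path to the src/ folder (defaults to ./src)
check_domain_wired() {
  domain="$1"
  src_dir="${2:-src}"
  status=0
  for file in gateway/issue-certificates gateway/haproxy.cfg; do
    if ! grep -q -F -- "$domain" "${src_dir}/${file}" 2>/dev/null; then
      echo "missing in ${file}: ${domain}" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example, `check_domain_wired hello.example.org` succeeds silently for the setup described in this post, and it reports the affected file names otherwise.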

[1] Hint: click on the file names to view them.

[2] We use the root user here mainly for simplicity, but this has security risks. Docker also supports rootless mode.

[3] Keep in mind that Let’s Encrypt servers may enforce rate limits, so especially for testing you should use their staging servers.

[4] We could also save the installation commands as a script, but that may not be portable across Linux distributions.

[5] An alternative solution to the ACME dilemma would be to temporarily deactivate (comment out) the https frontend in haproxy.cfg, but we can’t trivially automate that.